Yes, it's true. We can screw up. We're pros at it!

In this chapter, we are swept away by tornadoes and dropped off somewhere in Jersey, a serious fear for those who live in the great American Midwest. For those who aren't following our social networks, we made the switch from Apache to Nginx. Why, you ask? After a lot of research, and seeing that many of the higher-end programs we use have migrated to the Nginx platform, it seemed powerful enough to give it a go. For the most part, the migration was a success! But as with anything new, you tend to get a little crazy with the configuration, and sometimes you screw up!

To those who were wondering why things went silent for a bit: well, there you go! Just table-flipping the website once again! Would you like to know more?

Apache <-> Nginx

We guess we should start with the pros of the migration, huh? What does a blogger gain from table-flipping the very core of what makes their website work?

Memory saving.

We'd say that out of everything, a major pro of switching from Apache to Nginx is the amount of RAM saved on our VPS. Now, bear in mind our VPS isn't the beefiest system money can buy. We could buy something with more cores, more RAM, etc. However, we are just a blog. A blog that takes no shit or money from corporations.

WebP

This website serves WebP images using the EWWW Image Optimizer plugin. Under Apache, every time we made the recommended changes so the web server would do the heavy lifting of checking for WebP support, Apache simply ignored us, forcing us to fall back on the plugin's slower PHP/JS method. Then we added this to our Nginx configuration:

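```nginx
# http {} block: $webp_suffix must be defined somewhere, or nginx refuses
# to load the config. The usual pattern maps the Accept header:
map $http_accept $webp_suffix {
    default  "";
    "~*webp" ".webp";
}

# server {} block: serve image.jpg.webp when it exists and the browser
# accepts WebP, otherwise the original image, otherwise a 404.
location ~* ^.+\.(png|jpe?g)$ {
    expires 30d;
    add_header Cache-Control "public, no-transform";
    add_header Vary Accept;
    try_files $uri$webp_suffix $uri =404;
}
```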

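It worked on the first try, which is bad-ass! The `try_files` line serves `image.jpg.webp` in place of `image.jpg` whenever `$webp_suffix` is `.webp` and that file exists, falling back to the original image and then to a 404. `Vary: Accept` tells caches that the same URL can return different bytes depending on the browser's `Accept` header.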
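To make the file-selection logic concrete, here is a small Python model of what the location block does. It is a sketch under assumptions, not nginx itself: it assumes `$webp_suffix` is `.webp` when the `Accept` header mentions webp and empty otherwise (the common `map $http_accept` pattern), and it stands in for the disk with a set of paths. `webp_suffix` and `resolve` are hypothetical names.

```python
import re
from typing import Optional, Set, Tuple

# Same pattern as the nginx location block (~* means case-insensitive).
IMAGE_LOCATION = re.compile(r"^.+\.(png|jpe?g)$", re.IGNORECASE)


def webp_suffix(accept: str) -> str:
    """Assumed map: ".webp" when the Accept header mentions webp, else ""."""
    return ".webp" if "webp" in accept.lower() else ""


def resolve(uri: str, accept: str, existing: Set[str]) -> Tuple[int, Optional[str]]:
    """Model `try_files $uri$webp_suffix $uri =404` for a PNG/JPEG request."""
    if not IMAGE_LOCATION.match(uri):
        raise ValueError("this location only handles .png/.jpg/.jpeg URIs")
    for candidate in (uri + webp_suffix(accept), uri):
        if candidate in existing:  # the first candidate that exists wins
            return 200, candidate
    return 404, None


if __name__ == "__main__":
    files = {"/uploads/cat.jpg", "/uploads/cat.jpg.webp"}
    print(resolve("/uploads/cat.jpg", "image/avif,image/webp,*/*", files))
    print(resolve("/uploads/cat.jpg", "image/png,*/*", files))
```

Because one URL can yield either file, the `Vary: Accept` header matters: without it, a shared cache could hand a WebP copy to a browser that never asked for one.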

Security.

This is a double-edged sword. But we felt it was necessary.

```nginx
ssl_prefer_server_ciphers on;
ssl_ciphers EECDH+AESGCM:EDH+AESGCM;
```

This site has its own SSL certificate, so we get to decide which ciphers we accept over TLS. `ssl_prefer_server_ciphers on` makes the server's preference order win over the browser's, and the `ssl_ciphers` string limits connections to forward-secret key exchange (ECDHE or DHE) with AES-GCM encryption. That shuts out the weaker legacy ciphers that downgrade and man-in-the-middle attacks have historically exploited. The catch is that clients which cannot negotiate TLS 1.2 with AES-GCM, such as older versions of Internet Explorer, get locked out.
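To see exactly which ciphers a string like this allows, you can let OpenSSL expand it. nginx hands `ssl_ciphers` to OpenSSL, and Python's `ssl` module accepts the same OpenSSL cipher-list syntax, so this sketch lists the TLS 1.2 suites the string permits. The result depends on the OpenSSL build Python is linked against, which may differ from the one on the server. TLS 1.3 suites (names starting with `TLS_`) are configured separately in OpenSSL and are filtered out here. `allowed_tls12_ciphers` is a hypothetical helper name.

```python
import ssl
from typing import List

# The cipher string from the nginx config above.
NGINX_CIPHERS = "EECDH+AESGCM:EDH+AESGCM"


def allowed_tls12_ciphers(cipher_string: str = NGINX_CIPHERS) -> List[str]:
    """Expand an OpenSSL cipher string into the TLS 1.2 suite names it allows."""
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    ctx.set_ciphers(cipher_string)  # raises ssl.SSLError if nothing matches
    # get_ciphers() also reports TLS 1.3 suites, which this string does not control.
    return [c["name"] for c in ctx.get_ciphers() if not c["name"].startswith("TLS_")]


if __name__ == "__main__":
    for name in allowed_tls12_ciphers():
        print(name)
```

Every name printed pairs an ephemeral key exchange (`ECDHE-` or `DHE-`) with AES-GCM, which is why a client lacking both can no longer connect.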

GZip only when necessary.

```nginx
# Requires gzip on; (compression is off by default in Nginx).
gzip_vary on;
gzip_proxied any;
gzip_comp_level 6;
gzip_buffers 16 8k;
gzip_http_version 1.1;
gzip_types text/plain text/css application/json application/javascript
           text/xml application/xml application/xml+rss text/javascript;
```

This is another super-cool thing about Nginx: the web server does the compressing, and only for clients that ask for it with `Accept-Encoding: gzip`. `gzip_http_version 1.1` skips HTTP/1.0 requests, while HTTP/1.1 and HTTP/2 requests both qualify. HTTP/2 does not make gzip redundant: its HPACK scheme compresses headers, not response bodies. `gzip_vary on` adds `Vary: Accept-Encoding` so caches keep compressed and uncompressed copies apart, and `gzip_proxied any` compresses responses to proxied requests too. We also keep the benefit we had under Apache of letting the web server do all of this instead of relying on stupid WordPress caching plugins, which got in the way of making our website work 80% of the time.
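As a rough illustration of when the gzip directives above kick in, here is a simplified Python model of the decision. It is a sketch, not nginx's actual logic: it assumes `gzip on;` is set and `gzip_min_length` is left at its default of 20 bytes, and it ignores q-values in `Accept-Encoding`, `gzip_proxied` and `gzip_disable`. `should_gzip` and `compress_level6` are hypothetical names; note that an HTTP/2 request counts as version 2.0 and therefore passes `gzip_http_version 1.1`.

```python
import gzip
from typing import Tuple

# gzip_types from the config above; nginx always compresses text/html too.
GZIP_TYPES = frozenset({
    "text/html",
    "text/plain", "text/css", "application/json", "application/javascript",
    "text/xml", "application/xml", "application/xml+rss", "text/javascript",
})
GZIP_HTTP_VERSION = (1, 1)  # gzip_http_version 1.1
GZIP_MIN_LENGTH = 20        # nginx's default gzip_min_length (assumed unchanged)


def should_gzip(http_version: Tuple[int, int], accept_encoding: str,
                content_type: str, body_length: int) -> bool:
    """Simplified model of nginx's decision to gzip a response."""
    mime = content_type.split(";")[0].strip().lower()  # drop "; charset=..."
    return (
        "gzip" in accept_encoding.lower()
        and http_version >= GZIP_HTTP_VERSION
        and mime in GZIP_TYPES
        and body_length >= GZIP_MIN_LENGTH
    )


def compress_level6(body: bytes) -> bytes:
    """Compress at the same zlib level as gzip_comp_level 6."""
    return gzip.compress(body, compresslevel=6)


if __name__ == "__main__":
    css = b"body { margin: 0; padding: 0; }\n" * 200
    print(should_gzip((2, 0), "gzip, deflate, br", "text/css", len(css)))  # HTTP/2 client
    print(should_gzip((1, 0), "gzip", "text/css", len(css)))               # HTTP/1.0 client
    print(len(css), "->", len(compress_level6(css)), "bytes")
```

Level 6 is a common middle ground: higher levels cost noticeably more CPU per response for only slightly smaller output on typical text assets.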

The screw-up.

Among the many security upgrades, we picked up this one directive, which gets repeated over and over throughout the Nginx help guides out on the net:

```nginx
add_header X-Robots-Tag none;
```

We read this directive as "no X-Robots-Tag rules at all," so crawlers would fall back to whatever our robots.txt sets as the rules of engagement. (Leaving the header out entirely is what actually does that.)

The downward spiral.

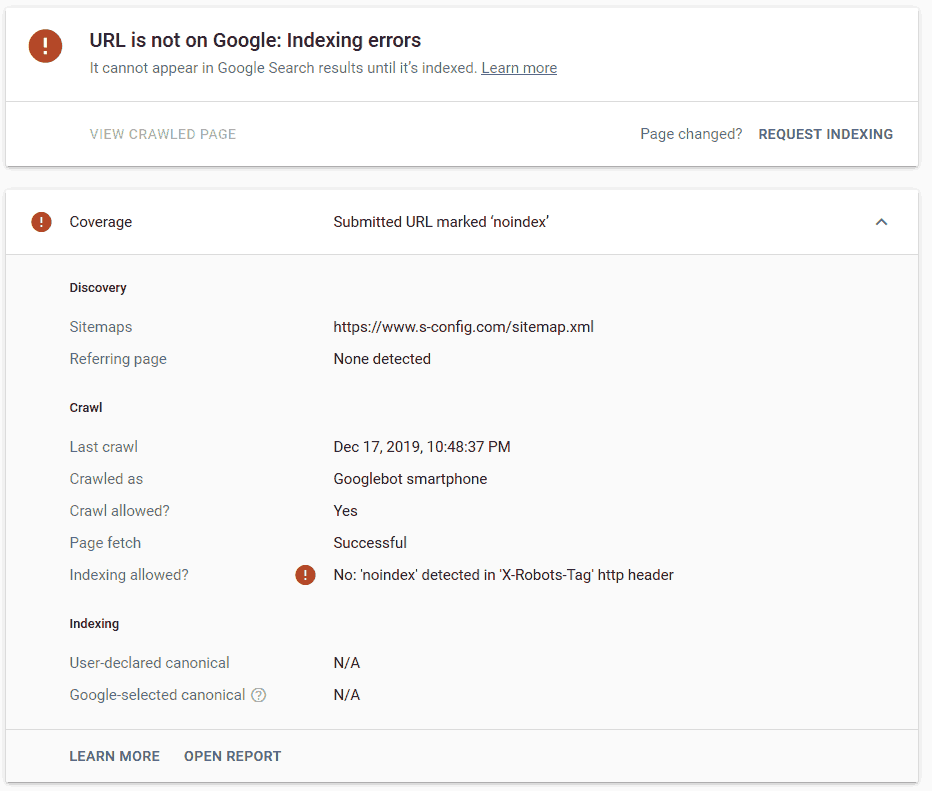

What actually happens, at least with search-engine bots, is that `X-Robots-Tag: none` means the same as "noindex, nofollow". It tells the search-engine community to fuck off! We had assumed "none" meant "no rules"; crawlers that honor the header read it as the strictest setting instead. Boy, does it do a great job of getting you removed! Within 48 hours, 80 percent of the site was dropped from Google's index. Like a YouTuber who used public-domain classical music, we were stripped off the 'known net' by our own doing, and with the quickness!
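Here is a minimal sketch of how a crawler following Google's documented semantics would read the header values in this post, with `none` expanding to `noindex, nofollow`. It assumes a plain rule list: no `googlebot:`-style user-agent prefix and no `unavailable_after` date (whose own commas would break the split). Real crawlers use their own parsers; `effective_rules` and `blocks_indexing` are hypothetical names.

```python
from typing import Set


def effective_rules(header_value: str) -> Set[str]:
    """Split an X-Robots-Tag value into lower-case rules and expand "none"."""
    rules = {part.strip().lower() for part in header_value.split(",") if part.strip()}
    if "none" in rules:  # documented shorthand for "noindex, nofollow"
        rules |= {"noindex", "nofollow"}
    return rules


def blocks_indexing(header_value: str) -> bool:
    """True if a compliant crawler would drop the page from its index."""
    return "noindex" in effective_rules(header_value)


if __name__ == "__main__":
    for value in ("none", "noarchive"):
        print(value, "->", sorted(effective_rules(value)), blocks_indexing(value))
```

The difference between the two values is the whole story of this chapter: one removes the page from search results, the other only affects archiving.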

```nginx
add_header X-Robots-Tag "noarchive";
```

If X-Robots-Tag must do something, it should tell crawlers not to archive the site, so Google can't take hits away from us by serving a cached copy of our pages from its own servers. Even when you ask Google Search Console to re-crawl, it takes weeks for the damage from misreading X-Robots-Tag to be undone.

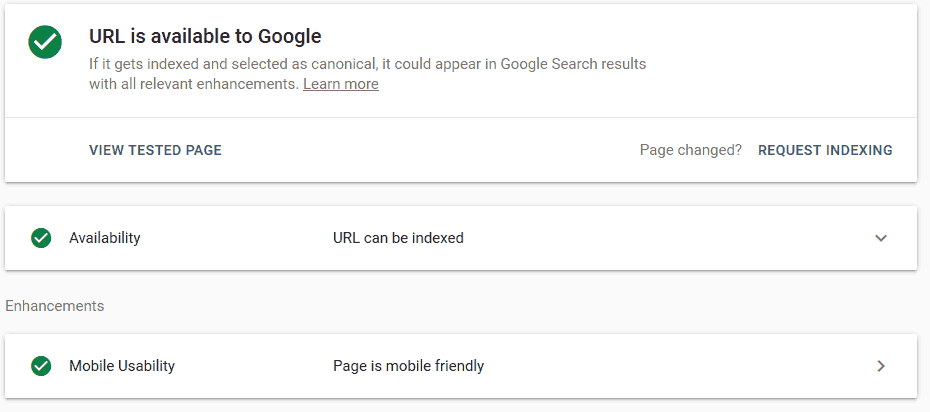

Of course, you can smash the live test button at the top of Search Console to make sure the change went through. But using it page by page to undo the damage is slow and inefficient; it's ultimately better to wait for regular re-indexing to clear your entire site of an X-Robots disaster like ours.
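Before waiting on Google, it can help to confirm what your server actually sends. This sketch, an assumption rather than anything Search Console provides, issues a HEAD request and returns every `X-Robots-Tag` value, and can also parse a saved `curl -sI` dump. Checking both a page and an image URL is worthwhile: nginx only inherits `add_header` from an outer level when the current level defines none, and the WebP location block sets its own headers. The function names are hypothetical.

```python
import io
import sys
import urllib.request
from http.client import HTTPMessage, parse_headers
from typing import List


def x_robots_values(headers: HTTPMessage) -> List[str]:
    """Every X-Robots-Tag value; servers may send the header more than once."""
    return headers.get_all("X-Robots-Tag") or []


def parse_raw_headers(raw: bytes) -> HTTPMessage:
    """Parse a saved header dump such as `curl -sI URL` output."""
    lines = raw.replace(b"\r\n", b"\n").split(b"\n")
    if lines and lines[0].startswith(b"HTTP/"):
        lines = lines[1:]  # drop the status line; parse_headers wants headers only
    return parse_headers(io.BytesIO(b"\r\n".join(lines) + b"\r\n\r\n"))


def fetch_x_robots(url: str, timeout: float = 10.0) -> List[str]:
    """HEAD the URL (following redirects) and report its X-Robots-Tag values."""
    req = urllib.request.Request(url, method="HEAD")
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return x_robots_values(resp.headers)


if __name__ == "__main__":
    for url in sys.argv[1:]:
        print(url, fetch_x_robots(url))
```

An empty list means the header is gone and robots.txt alone decides what crawlers may do.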

Final thoughts.

When you screw up, it's often best to look for the positive. We're happy to say the amount of spam hitting our inbox dropped sharply, right along with the number of search results pointing people to this site. Losing popularity with the lowest common denominator of the internet, the everlasting swarm of bots and spammers, is not a terrible thing to worry about.

Eventually, it will come back. If other search engines didn't hang on every site survey Google does, and perhaps did their own research, they would come back a lot quicker. All in all, it was highly educational. We're left with a much faster website, and we gladly accept the consequences.

We didn't get kicked off of Google Search. We kicked ourselves off! For now, at least!

Until next time. That's what server said.

END OF LINE+++